Something I see too often, in both immature data science practitioners and immature data science teams, is overcomplication: the desire to use complex neural networks when there isn't enough data to support them, or to include all kinds of variables that don't actually add predictive value.

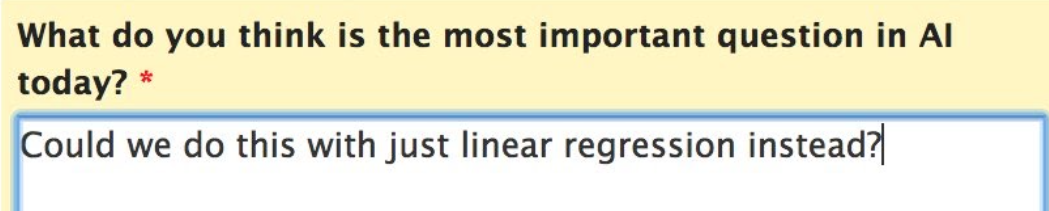

Ironically, using a simple solution is often a sign of sophistication. The experienced data scientists I know can tell when complexity is warranted, but at the end of the day, the simple solution often gives the best ratio of reward to effort.

For inexperienced data practitioners, the reason for unnecessary complexity is obvious: it's a combination of wanting to demonstrate you know complex methods, and the lack of experience to know when enough is enough. That can be fine when building up a portfolio and gaining experience.

In immature data science teams, the problem is usually more serious. It leads to what I'm calling Data Bullshit: data science projects that are overly complicated for the sake of sounding complicated.

Data Naivete Leads to Perverse Incentives

One of my previous bosses would always brag about how the models our team was making "included thousands of variables". This was true. What he didn't say was that we could get the same model performance with a tiny fraction of those variables. The additional variables were just bloat: they complicated extracting all of the data elements, added unnecessary complexity to the code that made it harder to maintain, and added literally months to development time.
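For anyone who wants to test a claim like this on their own models, here is a rough sketch of the kind of check I mean. Everything in it is made up for illustration: the data is synthetic (200 variables, of which only 5 carry any signal), the model is a bare-bones nearest-centroid classifier, and ranking variables by the gap between class means is just one simple option. It is not the model or the data from that team. The idea is simply to fit the same model on all the variables and on a small top-ranked subset, then compare accuracy on held-out data.

```python
import random

def make_data(rng, n_rows, n_features, n_informative, shift=1.5):
    """Synthetic rows: only the first n_informative columns depend on the label."""
    rows, labels = [], []
    for _ in range(n_rows):
        y = rng.randint(0, 1)
        row = [rng.gauss(shift * y if j < n_informative else 0.0, 1.0)
               for j in range(n_features)]
        rows.append(row)
        labels.append(y)
    return rows, labels

def centroids(rows, labels, cols):
    """Per-class mean of each selected column."""
    means = {}
    for cls in (0, 1):
        members = [r for r, y in zip(rows, labels) if y == cls]
        means[cls] = [sum(r[j] for r in members) / len(members) for j in cols]
    return means

def accuracy(train, test, cols):
    """Nearest-centroid classifier restricted to the given columns."""
    means = centroids(train[0], train[1], cols)
    correct = 0
    for row, y in zip(*test):
        dist = {cls: sum((row[j] - m) ** 2 for j, m in zip(cols, means[cls]))
                for cls in (0, 1)}
        correct += (min(dist, key=dist.get) == y)
    return correct / len(test[1])

rng = random.Random(0)  # fixed seed so the run is repeatable
N_FEATURES, N_INFORMATIVE = 200, 5  # hypothetical sizes, chosen for the demo
train = make_data(rng, 400, N_FEATURES, N_INFORMATIVE)
test = make_data(rng, 400, N_FEATURES, N_INFORMATIVE)

all_cols = list(range(N_FEATURES))
full = centroids(train[0], train[1], all_cols)
# Rank columns by how far apart the class means are on the training set.
ranked = sorted(all_cols, key=lambda j: abs(full[1][j] - full[0][j]), reverse=True)
top_cols = ranked[:N_INFORMATIVE]

acc_all = accuracy(train, test, all_cols)
acc_top = accuracy(train, test, top_cols)
print(f"all {N_FEATURES} variables: {acc_all:.3f}")
print(f"top {len(top_cols)} variables: {acc_top:.3f}")
```

In real data you won't know how many variables matter, so you would try several subset sizes and see where held-out accuracy stops improving. If the curve flattens after a few dozen variables, the other thousands are costing you maintenance and development time for nothing.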

There was a ton of low-hanging fruit, places we could be adding tremendous value with small, properly targeted projects, but none of them sounded spectacular enough. It was easier to get a spotlight if he claimed we were doing things that sounded super sophisticated, that involved skills that no other team had, even if the simple stuff would have worked just as well.

This is a pattern I see repeated: a leader who only knows enough to be dangerous, a data naive organization where people can't tell what's bullshit, and an incentive to justify the data science team's expensive salaries.

Widespread data naivete in an organization, where people are wowed by the talk of statistical models and machine learning sounds like magic, results in these perverse incentives - especially when there is data naivete at the executive level. If the people at the top don't know how to smell out data bullshit, they're going to be wowed by the complexity of the solution and incorrectly think the complexity was required, which in turn justifies the expensive team. In reality, simpler solutions would be better and more quickly implemented - but those solutions are too easy to explain, and therefore don't show how the data science team is "special" for having come up with them (even though it often takes more experience to spot where a simple solution can be used than it takes to throw the kitchen sink at a problem).

This trend of needlessly complicated methods reached an extreme at this organization when one executive suggested we capture sound data at some of our sites to see if there were patterns between compliance issues and sound features.

This was going to be a huge logistical nightmare - literally planting recording hardware in thousands of sites, building data pipelines to collect and process the data, dealing with potential legal issues of placing recording devices, collecting data for months just to get a handful of positive data points, etc. There was going to be so much variance in the data due to dumb factors like where the device was placed in a site, and it was clear to every data literate person that there was no way the data was going to be helpful for this classification.

More importantly, there were plenty of other ways of getting more relevant data that wasn't currently being used - one of the big things they wanted to look for in the noise data was the sounding of alarms, but we could just pull alarm triggers more directly without needing any hardware! But none of that was good enough, because this executive thought this idea would sound good. And it did - he gave talks about this idea and drew a lot of attention for his innovative thinking.

I was one of two data scientists on the team assigned to this project. We both knew it was doomed to failure but couldn't convince the data illiterate leadership. It lowered morale and took a huge amount of our time and resources. I ended up leaving the company, and was happy to wash my hands of the project.

Conclusion

Data illiteracy leads to data bullshit. Leadership in a company using data science needs either to be data literate or to be willing to listen to those who are. Otherwise, you're going to end up with time-wasting projects, you're going to lose your best data scientists, and the projects that do get completed are going to be overly complicated messes.
